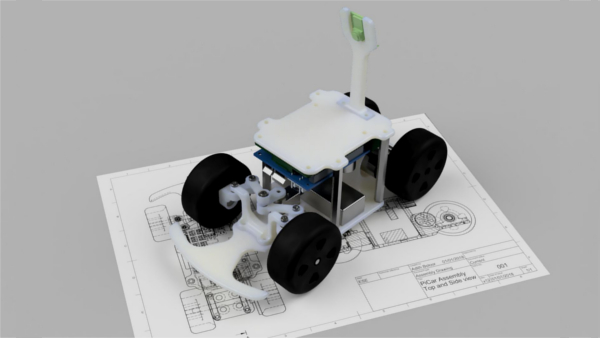

The ROS and Gazebo Robot project uses ROS (Robot Operating System), Gazebo, and Rviz to simulate a two-wheeled differential drive robot. Autonomous cars are the future, and this project aims to test some of the most important features of such cars, namely navigation and collision detection. The goal is to implement SLAM (simultaneous localization and mapping) for the PiCar project.

Project Details

- Made for: Personal Project

- Date: March, 2018

- Github: ROS and Gazebo Robot

Project Story

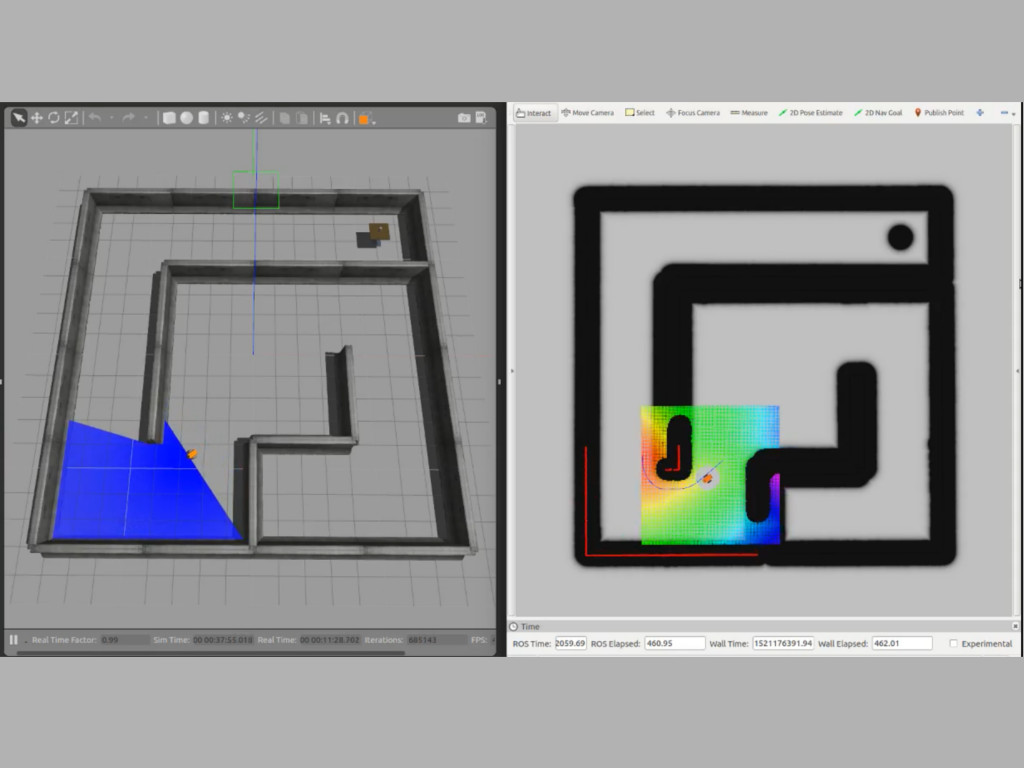

In the videos and images, the left window is Gazebo, which shows the 3D simulation of the robot along with its Hokuyo LIDAR sensor, whose scan appears as dense blue streaks. The window on the right is Rviz, which shows the robot moving through the learned map of the environment and lets you set navigation goals. The red lines are the walls detected by the LIDAR sensor, and the thick black lines that overlay them are the learned map of the setup. The robot tries to avoid the black regions. Rviz's 2D Nav Goal tool moves the robot from point A to point B, as seen in Figs. 1 and 3. The rainbow cloud map visualizes the total cost for navigation (red: towards the goal; blue: away from the goal). The SLAM implementation draws on http://moorerobots.com/blog/post/1. If the following GIFs don't load, use the embedded video below.
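To give a rough feel for what a navigation cost map encodes, here is a toy sketch in plain Python. It is not the ROS navigation stack's actual implementation: the grid, the `cost_to_goal` and `follow_gradient` helpers, and the four-connected breadth-first search are all assumptions made for illustration. Occupied cells (the black regions of the learned map) never receive a cost, and every free cell is labelled with its step count to the goal, so a robot can reach the goal by repeatedly stepping to a cheaper neighbour.

```python
from collections import deque

# Hypothetical occupancy grid: '#' is an occupied (black) cell, '.' is free space.
GRID = [
    "......",
    ".##...",
    ".#..#.",
    "....#.",
]

# Four-connected moves: down, up, right, left.
MOVES = ((1, 0), (-1, 0), (0, 1), (0, -1))


def cost_to_goal(grid, goal):
    """Breadth-first search outward from the goal over free cells.

    Returns a dict mapping each reachable (row, col) to its step count
    from the goal. Occupied cells never receive a cost.
    """
    rows, cols = len(grid), len(grid[0])
    costs = {goal: 0}
    queue = deque([goal])
    while queue:
        r, c = queue.popleft()
        for dr, dc in MOVES:
            nr, nc = r + dr, c + dc
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] != "#" and (nr, nc) not in costs:
                costs[(nr, nc)] = costs[(r, c)] + 1
                queue.append((nr, nc))
    return costs


def follow_gradient(costs, start):
    """Walk from start to the goal by always stepping to the cheapest neighbour."""
    path = [start]
    while costs[path[-1]] > 0:
        r, c = path[-1]
        neighbours = [(r + dr, c + dc) for dr, dc in MOVES]
        path.append(min((n for n in neighbours if n in costs), key=costs.get))
    return path


costs = cost_to_goal(GRID, goal=(3, 5))
path = follow_gradient(costs, start=(0, 0))
print(path)
```

In this sketch, low-cost cells correspond to the "towards goal" end of the rainbow colouring and high-cost cells to the "away from goal" end. A real costmap also inflates obstacles and works with continuous robot poses, which this example leaves out.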

Fig. 1: Simulating SLAM for a simple maze (Speed: 10x Simulation Time)

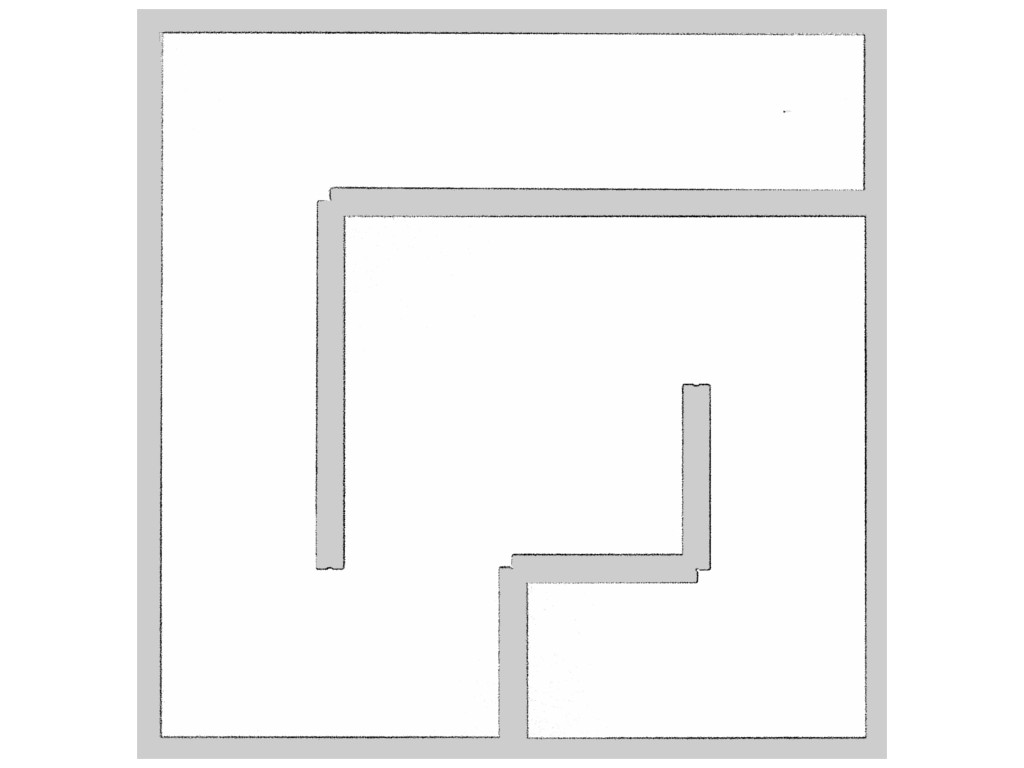

Fig. 2: Simple Maze map manually created using the gmapping tool for ROS.

Fig. 3: Simulating SLAM for the Willow Garage Floor (Speed: 10x Simulation Time)

Fig. 4: Willow Garage map manually created using the gmapping tool for ROS.